What Does Trustworthy Mean? Performance, Privacy, and Causality in Machine Learning

Instructor

Michael Kamp

Description

Modern machine learning systems are increasingly used in critical applications such as healthcare, finance, and policy-making. In such settings, it is not enough for models to be accurate; they also need to be trustworthy. But what does that actually mean? In this seminar, we explore three key aspects of trustworthy machine learning: performance guarantees, privacy, and causality.

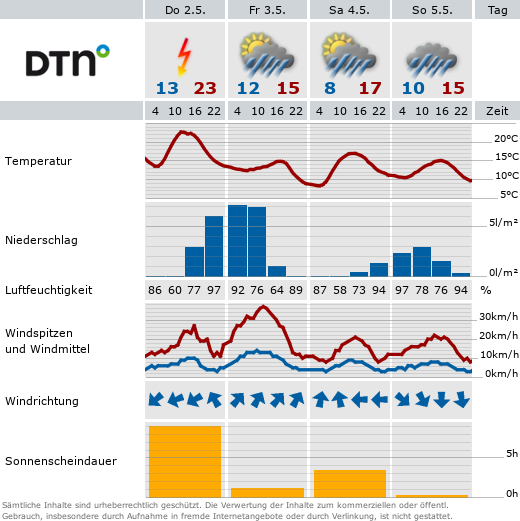

Performance guarantees go beyond empirical accuracy and aim to answer how well a learned model will generalize to new, unseen data. Classical learning theory provides some insights here, but often falls short when applied to deep learning models. Modern approaches seek new ways to explain and guarantee good generalization by analyzing the geometry of the model’s behavior or using probabilistic reasoning tools.
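As an illustration of what such a guarantee can look like, the following is a standard textbook bound from classical learning theory, not necessarily one covered by the seminar papers. It assumes a finite hypothesis class $\mathcal{H}$, a loss bounded in $[0, 1]$, and $n$ i.i.d. training samples; the symbols $R$ (true risk), $\hat{R}_n$ (empirical risk), and $\delta$ (failure probability) are notation introduced here for the example.

```latex
% With probability at least 1 - \delta over the draw of the n training samples,
% the following holds simultaneously for every hypothesis h in \mathcal{H}:
\[
  R(h) \;\le\; \hat{R}_n(h) + \sqrt{\frac{\ln|\mathcal{H}| + \ln(1/\delta)}{2n}}
\]
```

The bound follows from Hoeffding's inequality combined with a union bound over $\mathcal{H}$. It also hints at why such results fall short for deep learning: for very large model classes the complexity term can exceed 1, which makes the bound vacuous.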

Privacy becomes essential when machine learning is applied to sensitive data, especially in domains like medicine. Simply removing names or IDs is not enough to protect individual information. Instead, we need principled ways to learn from data without exposing it. Key strategies are to ensure that individual contributions to the training data cannot be traced, e.g., by perturbing data before sharing it, or to design training methods that do not require centralizing sensitive data in the first place.
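To make the perturbation idea concrete, here is a minimal sketch of the Laplace mechanism from differential privacy. It is one possible instance of the strategy and is not named in this description; it perturbs a released statistic (a mean) rather than the raw records. The function names, the clipping bounds `lower` and `upper`, and the privacy parameter `epsilon` are assumptions made for illustration, and the sensitivity calculation assumes the number of records is public.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution."""
    # The difference of two i.i.d. exponential variables with mean `scale`
    # follows a Laplace distribution with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values, lower, upper, epsilon, rng=None):
    """Release a noisy mean of `values` (hypothetical helper, Laplace mechanism)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if not values:
        raise ValueError("values must not be empty")
    if lower >= upper:
        raise ValueError("lower must be smaller than upper")
    rng = rng or random.Random()

    # Clip every record so that a single individual has bounded influence.
    clipped = [min(max(v, lower), upper) for v in values]

    # Replacing one record changes the mean by at most (upper - lower) / n.
    sensitivity = (upper - lower) / len(clipped)
    true_mean = sum(clipped) / len(clipped)

    # Noise scale = sensitivity / epsilon: smaller epsilon means more noise.
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    ages = [34, 51, 29, 62, 45]  # made-up example records
    print(private_mean(ages, lower=0, upper=100, epsilon=1.0))
```

Each call returns a different noisy value. Averaged over many calls the noise cancels out, which is also why repeated releases consume additional privacy budget in practice.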

Causality addresses the question of why a model makes a certain prediction, and how robust that prediction is under changes to the data or the environment. Unlike standard prediction-based approaches, causal reasoning aims to uncover underlying relationships and structure in the data, which can help build models that are more interpretable and more stable under distribution shifts. Various techniques allow us to estimate causal structure directly from observational data.

In this proseminar, participants will learn about methods that address these three aspects by surveying a set of central publications and critically comparing them.

Organization

The seminar begins with a kickoff meeting at the start of the semester, where the three main topics (performance guarantees, privacy, and causality) will be introduced. Participants will then select one of these topics. Each topic is supervised by a researcher from the organizing group and comes with a curated set of four core research papers. All participants working on the same topic will study the same papers.

Over the course of the semester, participants are expected to read and understand these papers in depth. Where necessary, they are also encouraged to consult background material and related work to fully grasp the methods and context. To support this process, researchers from the organizing group will be available throughout the semester to help participants understand the material and discuss conceptual difficulties.

Based on their topic, each participant will write a comparative survey that explains the key ideas of the four papers, highlights their strengths and limitations, and discusses how they contribute to the broader goal of trustworthy machine learning.

Toward the end of the semester, each participant will present their findings in a 20-minute talk during one of several presentation sessions; the number of sessions depends on the number of participants. Attendance at all seminar sessions is mandatory. The final written reports are due approximately one week after the presentations.

Grades will be based equally on the presentation and the written report.

The proseminar will be taught in English.